Project Node.js Lambda & DynamoDB

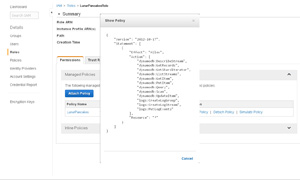

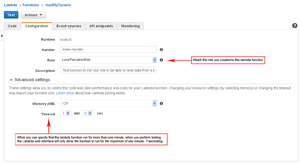

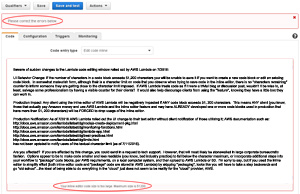

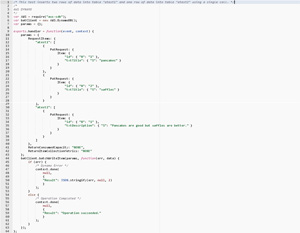

    var AWS = require("aws-sdk");
    var docClient = new AWS.DynamoDB.DocumentClient();

    /* The item to write; ID, AddedBy and Info are example attributes */
    var params = {
        TableName: "NameOfTable",
        Item: {
            ID: 123,
            AddedBy: "pancakes",
            Info: "Some sort of text to be added."
        }
    };

    /* context is the context object passed to the Lambda handler */
    docClient.put(params, function(err, data) {
        if (err) {
            /* Generate Response: the error is returned as a normal result, so the invocation itself still succeeds */
            context.done(null, { "Error": JSON.stringify(err, null, 2) });
        }
        else {
            /* Generate Response */
            context.done(null, { "Success": "You have putted, but not a golf put." });
        }
    });
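
The snippet above uses a `context` object, so it presumably runs inside a Lambda handler. Here is a minimal sketch of that wrapping, keeping the older `context.done` style used in this post. `makePutHandler` is a hypothetical helper name, and reading the item fields from `event` is an assumption. Passing the client in as a parameter is only a convenience so the flow can be tried without AWS credentials.

```javascript
// Hypothetical helper (not from the original post): builds a Lambda-style
// handler around any object exposing put(params, callback), such as an
// AWS.DynamoDB.DocumentClient instance.
function makePutHandler(docClient, tableName) {
    return function handler(event, context) {
        // Assumption: the item fields arrive on the event object.
        var params = {
            TableName: tableName,
            Item: {
                ID: event.ID,
                AddedBy: event.AddedBy,
                Info: event.Info
            }
        };

        docClient.put(params, function(err, data) {
            if (err) {
                // Same response shape as the post: the error is returned as the result.
                context.done(null, { "Error": JSON.stringify(err, null, 2) });
            } else {
                context.done(null, { "Success": "Item written to " + tableName });
            }
        });
    };
}
```

With the real SDK this could be exported as `exports.handler = makePutHandler(new AWS.DynamoDB.DocumentClient(), "NameOfTable");`.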

    var ServiceSummary_raw;
    data.Items.forEach(function(row) {
        ServiceSummary_raw = row.ServiceSummary;
    });

    var ServiceSummary_new = [];

    for (var x = 0; x < ServiceSummary_raw.length; x++) {
        var blurbtext = ServiceSummary_raw[x];

        /* Index 1 is the second entry in the list */
        if (x === 1) {
            blurbtext = "[NOTE] " + blurbtext;
        }

        /* Save the strings to the new list (aka array), which contains the change */
        /* It is implied each string value takes the form {"S": "string text"}. I've added toString() for clarity. */
        ServiceSummary_new.push(blurbtext.toString());
    }
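
The loop above can also be written as a small pure function, which makes it easy to try outside Lambda. This is only a sketch: `tagEntry` is a hypothetical name, and the sample strings are made up for illustration.

```javascript
// Hypothetical helper: returns a new list in which the entry at `index`
// is prefixed with `tag`. The input array is left untouched.
function tagEntry(list, index, tag) {
    return list.map(function(text, i) {
        var value = String(text);
        return i === index ? tag + value : value;
    });
}

var updated = tagEntry(["First blurb", "Second blurb", "Third blurb"], 1, "[NOTE] ");
console.log(updated);
// → [ 'First blurb', '[NOTE] Second blurb', 'Third blurb' ]
```

As in the loop, index 1 refers to the second entry of the list.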

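    /* put() replaces the entire item, so include every attribute the row should keep */
    var params = {
        TableName: "MyDynamoTable",
        Item: {
            "RowID": "Some-row-id-in-table", /* placeholder: use the key of the row being rewritten */
            "ServiceSummary": ServiceSummary_new
        }
    };

    docClient.put(params, function(err, data) {
        if (err) {
            /* Error occurred */
        }
        else {
            /* Execution completed okay */
        }
    });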
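
Because `put` replaces the whole item, a row with other attributes would lose them unless they are all passed back in. One alternative is `docClient.update` with an `UpdateExpression`, which changes only the named attribute. Below is a sketch of the request parameters, assuming `RowID` is the table's only key attribute. `buildSummaryUpdate` and the example row id are hypothetical.

```javascript
// Hypothetical helper: builds parameters for DocumentClient.update that
// overwrite only the ServiceSummary attribute of one row.
function buildSummaryUpdate(tableName, rowId, summary) {
    return {
        TableName: tableName,
        Key: { "RowID": rowId }, // assumption: RowID is the sole key attribute
        UpdateExpression: "SET ServiceSummary = :summary",
        ExpressionAttributeValues: { ":summary": summary }
    };
}

var updateParams = buildSummaryUpdate(
    "MyDynamoTable",
    "example-row-id", // made-up id for illustration
    ["First blurb", "[NOTE] Second blurb"]
);
console.log(JSON.stringify(updateParams, null, 2));
```

The resulting object would be passed as `docClient.update(updateParams, callback)`, with the same error handling as the `put` call.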
